This tutorial shows how sound files can be loaded and played.

Version 1: Audio Source, Audio Sequence, Timeline, Listener

Audio Formats

Besides audio sources, the Murl engine also places audio sinks (listeners) as nodes in the graph of its virtual world. This makes it possible to take positions into account when playing audio files: depending on its position, a sound is louder on either the left or the right speaker, and it becomes quieter with increasing distance.

- Note

- Only mono sounds are affected by their position. Stereo sounds, in contrast, are always played at the same volume and with the left/right balance defined in the sound file.
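The left/right behaviour of a positioned mono source can be illustrated with a common generic technique: constant-power panning. The sketch below is not the Murl engine's implementation. The `ConstantPowerPan` function, the `StereoGain` struct and the pan range of -1.0 (fully left) to +1.0 (fully right) are assumptions made for this example.

```cpp
#include <cmath>

// Hypothetical helper, not part of the Murl API: left/right gains for a
// mono source using constant-power panning.
struct StereoGain
{
    double left;
    double right;
};

// pan = -1.0 means fully left, 0.0 centered, +1.0 fully right (assumed range).
StereoGain ConstantPowerPan(double pan)
{
    // Clamp the pan value to the valid range.
    if (pan < -1.0) pan = -1.0;
    if (pan > 1.0) pan = 1.0;
    // Map [-1, 1] to an angle in [0, pi/2].
    const double pi = std::acos(-1.0);
    const double angle = (pan + 1.0) * pi / 4.0;
    // cos^2 + sin^2 = 1, so the total output power stays constant.
    return StereoGain{std::cos(angle), std::sin(angle)};
}
```

A centered source gets about 0.707 on each speaker rather than 0.5, so it does not sound quieter than a source panned fully to one side.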

The Murl engine supports audio files in the .wav and .ogg formats with integer sample values. For testing purposes, we load a mono file and a stereo file from the Freesound database (https://www.freesound.org):

https://www.freesound.org/people/HerbertBoland/sounds/33637/
https://www.freesound.org/people/THE_bizniss/sounds/39458/

We rename the files and save them in the sounds subfolder of the project's resource folder:

data/packages/main.murlres/sounds/sfx_boom_stereo.wav
data/packages/main.murlres/sounds/sfx_laser_mono.wav

In the file package.xml, each sound file is given a unique id:

<?xml version="1.0" ?>
<Package id="package_main">
    <!-- Sound resources -->
    <Resource id="sfx_laser" fileName="sounds/sfx_laser_mono.wav"/>
    <Resource id="sfx_boom" fileName="sounds/sfx_boom_stereo.wav"/>

    <!-- Graph resources -->
    <Resource id="graph_main" fileName="graph_main.xml"/>

    <!-- Graph instances -->
    <Instance graphResourceId="graph_main"/>
</Package>

Audio

To play an audio file, the following steps are necessary:

- Make the audio resource available in the graph (Graph::AudioSource node).
- Position the audio source in the virtual world (Graph::AudioSequence node).
- Create a timeline to control the audio source (Graph::Timeline node).
- Position an audio sink (listener) in the virtual world (Graph::Listener node).

The graph node AudioSource creates an instance of the audio resource and adds it to the graph:

<AudioSource id="soundBoom" audioResourceId="package_main:sfx_boom"/>
<AudioSource id="soundLaser" audioResourceId="package_main:sfx_laser"/>

The AudioSequence graph node represents an audio source within the virtual world. The audioSourceIds attribute specifies which audio source (that is, which AudioSource node) this sequence plays.

Several audio sources can be referenced as a comma-separated list. They are played seamlessly one after the other, in the given order:

<AudioSequence
    audioSourceIds="soundLaser,soundBoom"
/>

An alternative way to use more than one audio source in the sequence is to explicitly specify a zero-based index for the audioSourceId attribute:

<AudioSequence
    audioSourceId.0="soundLaser"
    audioSourceId.1="soundBoom"
/>

By default, the sample format of the audio buffer is taken from the first audio file specified via audioSourceIds. Alternatively, the sampleFormat attribute can be used to specify the buffer format explicitly. Valid values for the sampleFormat attribute are:

These string values directly map to one of the enumeration values from IEnums::SampleFormat.
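This tutorial does not document the exact conversions the engine performs when formats differ. As an illustration, the sketch below shows two standard conversions of the kind a change of sample format involves:

- turning unsigned 8-bit PCM samples (as stored in 8-bit WAV files) into signed 16-bit samples;
- duplicating mono samples into an interleaved stereo buffer.

Both functions are hypothetical and are not Murl engine code.

```cpp
#include <cstdint>
#include <vector>

// Hypothetical conversions illustrating a sample format change (not Murl engine code).

// 8-bit WAV samples are unsigned (silence = 128); 16-bit samples are signed.
std::int16_t Convert8To16(std::uint8_t sample)
{
    // Shift to a signed range, then scale 8 bits up to 16 bits.
    return static_cast<std::int16_t>((static_cast<int>(sample) - 128) * 256);
}

// Duplicate each mono sample into an interleaved left/right pair.
std::vector<std::int16_t> MonoToStereo(const std::vector<std::int16_t>& mono)
{
    std::vector<std::int16_t> stereo;
    stereo.reserve(mono.size() * 2);
    for (std::int16_t sample : mono)
    {
        stereo.push_back(sample); // left channel
        stereo.push_back(sample); // right channel
    }
    return stereo;
}
```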

- Note

- If two or more audio files are played in an AudioSequence, differences in sample resolution (e.g. 16 bit/8 bit) and mode (mono/stereo) are adjusted automatically. The sampling rate, however, determines the playback pitch and is not adjusted, so it is recommended to use the same sampling rate for all of these sounds.
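The effect of a sampling rate mismatch can be estimated with a short calculation. The sketch below assumes that samples recorded at one rate are played back at a different rate without resampling. The function names are hypothetical.

```cpp
#include <cmath>

// Hypothetical helpers illustrating why mismatched sampling rates change the pitch.
// Playing samples recorded at sourceRate back at playbackRate speeds them up
// (or slows them down) by this factor.
double PitchRatio(double sourceRate, double playbackRate)
{
    return playbackRate / sourceRate;
}

// The same shift expressed in semitones (12 semitones = one octave).
double PitchShiftSemitones(double sourceRate, double playbackRate)
{
    return 12.0 * std::log2(PitchRatio(sourceRate, playbackRate));
}

// The playback duration shrinks by the same factor by which the pitch rises.
double PlaybackDuration(double sourceDurationSeconds, double sourceRate, double playbackRate)
{
    return sourceDurationSeconds / PitchRatio(sourceRate, playbackRate);
}
```

For example, a 22050 Hz file played at 44100 Hz sounds one octave (12 semitones) higher and finishes in half the time.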

The volume attribute adjusts the sound intensity; useful values range from 0.0 to 1.0. The rolloffFactor attribute influences how the volume is calculated from the distance to the listener. The exact calculation is explained in the documentation of the Graph::IListener interface.
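The Graph::IListener documentation remains the authoritative source for the volume calculation. As an illustration, the sketch below assumes that the default INVERSE_CLAMPED model behaves like the OpenAL "inverse distance clamped" model of the same name. The referenceDistance and maxDistance parameters are assumptions that do not appear in this tutorial.

```cpp
#include <algorithm>
#include <cmath>

// Minimal sketch, assuming an OpenAL-style "inverse distance clamped" model.
// referenceDistance (> 0) and maxDistance are assumed parameters; see
// Graph::IListener for the Murl engine's actual calculation.
double InverseClampedGain(double distance, double referenceDistance,
                          double maxDistance, double rolloffFactor)
{
    // Clamp the distance into [referenceDistance, maxDistance].
    distance = std::max(distance, referenceDistance);
    distance = std::min(distance, maxDistance);
    return referenceDistance /
           (referenceDistance + rolloffFactor * (distance - referenceDistance));
}

// Euclidean distance between listener and source positions (posX/posY/posZ).
double Distance(double x1, double y1, double z1, double x2, double y2, double z2)
{
    const double dx = x2 - x1;
    const double dy = y2 - y1;
    const double dz = z2 - z1;
    return std::sqrt(dx * dx + dy * dy + dz * dz);
}
```

Under this assumption the gain is multiplied by the volume attribute. With rolloffFactor="0.0", as used for sequenceBoom, the gain stays at 1.0 at any distance, so only volume determines the loudness.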

We now create an AudioSequence node with only a single audio source to play, encapsulated within a Graph::Timeline node:

<Timeline
    id="timelineBoom"
    startTime="0.0" endTime="7.5"
    autoRewind="yes"
>
    <AudioSequence
        id="sequenceBoom"
        audioSourceIds="soundBoom"
        volume="1.0" rolloffFactor="0.0"
        posX="0" posY="0" posZ="800"
    />
</Timeline>

The Timeline graph node defines the time span in which our sound is played. The startTime and endTime attributes specify, in seconds, which part of the sound file is played. A negative startTime delays the start of the sound.
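The relation between startTime, endTime and the audible part can be made explicit with a small model. The `TimelineWindow` struct below is hypothetical and is not Murl engine code. It assumes two things: timeline time maps one-to-one onto the playback position in the sound, and nothing is audible while the timeline time is still negative.

```cpp
// Hypothetical model of a Timeline window around an audio sequence (not the Murl API).
// Assumption: timeline time maps one-to-one onto the playback position in the sound.
struct TimelineWindow
{
    double startTime; // seconds; negative values delay the sound
    double endTime;   // seconds

    // Silence before the sound starts (only for negative start times).
    double Delay() const
    {
        return startTime < 0.0 ? -startTime : 0.0;
    }

    // Total running time of the timeline from start to end.
    double RunTime() const
    {
        return endTime - startTime;
    }

    // Playback position in the sound file at a given time after starting;
    // negative while the sound is still delayed.
    double SoundPositionAt(double elapsed) const
    {
        return startTime + elapsed;
    }
};
```

Under these assumptions, startTime="-1.0" with endTime="7.5" would give one second of silence followed by 7.5 seconds of sound.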

In order to create an audio sink, a Listener, a ListenerTransform and a ListenerState are necessary (similar to a camera):

<Listener
    id="listener"
    viewId="view"
/>
<ListenerTransform
    listenerId="listener"
    posX="0" posY="0" posZ="800"
/>
<ListenerState
    listenerId="listener"
/>

The Listener graph node creates a listener for a specific view. We only set the viewId attribute and keep the default distance model, INVERSE_CLAMPED.

With the ListenerTransform node we position the listener within the virtual world and with the ListenerState node we activate the listener for all subsequent audio sources.

Instead of the default distance model INVERSE_CLAMPED, one of the following distance models can also be chosen:

Similar to the sample formats explained above, these string values also directly map to one of the enumeration values from IEnums::DistanceModel. Again, the exact method of volume calculation is explained in the documentation of the Graph::IListener interface.
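For comparison, the sketch below implements linear and exponential clamped distance models as OpenAL defines them. It is an assumption that the corresponding IEnums::DistanceModel values in Murl behave exactly like this, so check the Graph::IListener documentation. The parameter names are illustrative only.

```cpp
#include <algorithm>
#include <cmath>

// Minimal sketches of two further distance models, assuming OpenAL-style
// definitions. Distances are clamped to [referenceDistance, maxDistance].
double ClampDistance(double distance, double referenceDistance, double maxDistance)
{
    return std::min(std::max(distance, referenceDistance), maxDistance);
}

// Linear falloff: drops to 1 - rolloffFactor at maxDistance.
double LinearClampedGain(double distance, double referenceDistance,
                         double maxDistance, double rolloffFactor)
{
    const double d = ClampDistance(distance, referenceDistance, maxDistance);
    const double gain = 1.0 - rolloffFactor * (d - referenceDistance) /
                                  (maxDistance - referenceDistance);
    return std::max(gain, 0.0); // never return a negative gain
}

// Exponential falloff: gain = (d / referenceDistance)^(-rolloffFactor).
double ExponentClampedGain(double distance, double referenceDistance,
                           double maxDistance, double rolloffFactor)
{
    const double d = ClampDistance(distance, referenceDistance, maxDistance);
    return std::pow(d / referenceDistance, -rolloffFactor);
}
```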

The file graph_main.xml contains all nodes used for our program:

<?xml version="1.0" ?>
<Graph>
    <View id="view"/>

    <Camera
        id="camera"
        fieldOfViewX="400"
        viewId="view"
        nearPlane="400" farPlane="2500"
        clearColorBuffer="yes"
    />
    <CameraTransform
        cameraId="camera"
        posX="0" posY="0" posZ="800"
    />
    <CameraState
        cameraId="camera"
    />

    <Listener
        id="listener"
        viewId="view"
    />
    <ListenerTransform
        listenerId="listener"
        posX="0" posY="0" posZ="800"
    />
    <ListenerState
        listenerId="listener"
    />

    <AudioSource id="soundBoom" audioResourceId="package_main:sfx_boom"/>
    <AudioSource id="soundLaser" audioResourceId="package_main:sfx_laser"/>

    <Timeline
        id="timelineBoom"
        startTime="0.0" endTime="7.5"
        autoRewind="yes"
    >
        <AudioSequence
            id="sequenceBoom"
            audioSourceIds="soundBoom"
            volume="1.0" rolloffFactor="0.0"
            posX="0" posY="0" posZ="800"
        />
    </Timeline>

    <Timeline
        id="timelineLaser"
        startTime="0.0" endTime="0.4"
        autoRewind="yes"
    >
        <AudioSequence
            id="sequenceLaser"
            audioSourceIds="soundLaser"
            volume="1.0" rolloffFactor="1.0"
            posX="0" posY="0" posZ="800"
        />
    </Timeline>
</Graph>

Timeline Node

In order to programmatically play the audio, we create a Logic::TimelineNode object for each Timeline graph node:

// Logic::TimelineNode members referencing the Timeline graph nodes
Logic::TimelineNode mSFXBoom;
Logic::TimelineNode mSFXLaser;

Bool App::SoundLogic::OnInit(const Logic::IState* state)
{
    state->GetLoader()->UnloadPackage("startup");

    // Look up the Timeline graph nodes by their ids
    Graph::IRoot* root = state->GetGraphRoot();
    AddGraphNode(mSFXBoom.GetReference(root, "timelineBoom"));
    AddGraphNode(mSFXLaser.GetReference(root, "timelineLaser"));

    // Abort initialization if any referenced node could not be found
    if (!AreGraphNodesValid())
    {
        return false;
    }

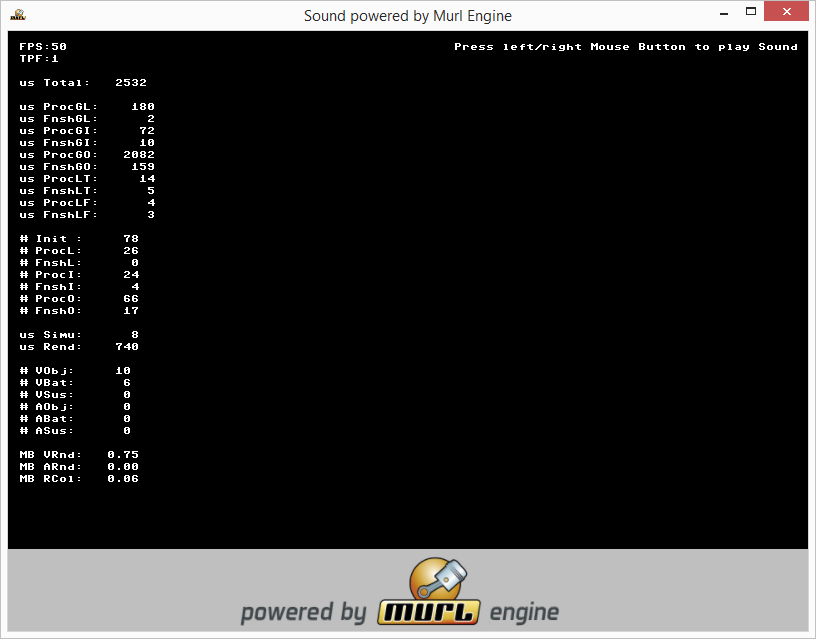

    state->SetUserDebugMessage("Press left/right Mouse Button to play Sound");
    return true;
}

The Logic::TimelineNode objects are used in the OnProcessTick() method to control playback. Pressing the left mouse button rewinds and starts the boom timeline, and pressing the right mouse button does the same for the laser timeline, so the corresponding sound is played again from the beginning.

void App::SoundLogic::OnProcessTick(const Logic::IState* state)
{
    Logic::IDeviceHandler* deviceHandler = state->GetDeviceHandler();

    // Left mouse button: restart the boom sound from the beginning
    if (deviceHandler->WasMouseButtonPressed(IEnums::MOUSE_BUTTON_LEFT))
    {
        mSFXBoom->Rewind();
        mSFXBoom->Start();
    }

    // Right mouse button: restart the laser sound from the beginning
    if (deviceHandler->WasMouseButtonPressed(IEnums::MOUSE_BUTTON_RIGHT))
    {
        mSFXLaser->Rewind();
        mSFXLaser->Start();
    }

    // Escape key or Back button: quit the application
    if (deviceHandler->WasRawKeyPressed(RAWKEY_ESCAPE) ||
        deviceHandler->WasRawButtonPressed(RAWBUTTON_BACK))
    {
        deviceHandler->TerminateApp();
    }
}

If the total duration of the sound files is not known in advance, it can be queried from the AudioSequence node with the GetTotalDuration() method. The start and end times can then be passed to the Start() method of the Timeline node; when Start() is called with explicit start and end times, it also performs a Rewind():

mTimelineNode->Start(0, mAudioSequenceNode->GetTotalDuration());
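An AudioSequence plays its sources back to back, so its total duration can be thought of as the sum of the individual source durations. The sketch below computes this from sample frame counts and sampling rates. The `AudioInfo` struct and the frame counts in the usage note are hypothetical. In the engine, GetTotalDuration() remains the way to query the real value.

```cpp
#include <vector>

// Hypothetical description of one decoded audio source (not a Murl type).
struct AudioInfo
{
    long long frameCount; // number of sample frames (one sample per channel)
    double sampleRate;    // frames per second, e.g. 44100
};

// Duration of a single source in seconds.
double SourceDuration(const AudioInfo& info)
{
    return static_cast<double>(info.frameCount) / info.sampleRate;
}

// Sources in a sequence play seamlessly one after another,
// so the sequence lasts as long as all sources together.
double SequenceDuration(const std::vector<AudioInfo>& sources)
{
    double total = 0.0;
    for (const AudioInfo& info : sources)
    {
        total += SourceDuration(info);
    }
    return total;
}
```

For instance, a hypothetical 44100 Hz file with 330750 frames lasts 7.5 seconds. Followed by a 17640-frame file (0.4 seconds), the sequence would last 7.9 seconds.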

Exercises

- Vary the parameters for position, volume and rolloff factor.

- Modify the OnProcessTick() method so that the end times of the Timeline nodes are retrieved from their corresponding AudioSequence nodes.

Version 2: Structuring

To keep larger projects manageable, it is recommended to split the graph into separate files. We create the files graph_sound_instance.xml and graph_sounds.xml and register them in package.xml with unique ids:

<?xml version="1.0" ?>
<Package id="package_main">
    <!-- Sound resources -->
    <Resource id="sfx_laser" fileName="sounds/sfx_laser_mono.wav"/>
    <Resource id="sfx_boom" fileName="sounds/sfx_boom_stereo.wav"/>

    <!-- Graph resources -->
    <Resource id="graph_main" fileName="graph_main.xml"/>
    <Resource id="graph_sound_instance" fileName="graph_sound_instance.xml"/>
    <Resource id="graph_sounds" fileName="graph_sounds.xml"/>

    <!-- Graph instances -->
    <Instance graphResourceId="graph_main"/>
</Package>

In the file graph_sound_instance.xml, we encapsulate the Timeline, AudioSource and AudioSequence nodes. To avoid naming conflicts, we wrap these nodes in a Namespace whose unique id is given by the custom attribute audioId. We also use the custom attributes duration and packageId, which receive default values on the Graph element:

<?xml version="1.0" ?>
<Graph duration="1.0" packageId="package_main">
    <Namespace id="{audioId}">
        <AudioSource id="sound" audioResourceId="{packageId}:{audioId}"/>
        <Timeline id="timeline" startTime="0.0" endTime="{duration}" autoRewind="yes">
            <AudioSequence id="sequence" audioSourceIds="sound" volume="1.0" rolloffFactor="0.0"/>
        </Timeline>
    </Namespace>
</Graph>
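The {audioId}, {packageId} and {duration} placeholders are filled in when the sub-graph is instantiated. The sketch below models that substitution in plain C++. It assumes that attributes passed on an Instance override the defaults declared on the Graph element. `ExpandPlaceholders` is a hypothetical function, not how the Murl engine implements it.

```cpp
#include <map>
#include <string>

using Attributes = std::map<std::string, std::string>;

// Hypothetical sketch: replace every "{name}" in text with the value from the
// Instance attributes, falling back to the Graph defaults (assumed semantics).
std::string ExpandPlaceholders(const std::string& text,
                               const Attributes& defaults,
                               const Attributes& instance)
{
    std::string result;
    std::string::size_type pos = 0;
    while (pos < text.size())
    {
        const std::string::size_type open = text.find('{', pos);
        if (open == std::string::npos)
        {
            break;
        }
        const std::string::size_type close = text.find('}', open);
        if (close == std::string::npos)
        {
            break;
        }
        // Copy the literal text before the placeholder.
        result.append(text, pos, open - pos);
        const std::string name = text.substr(open + 1, close - open - 1);
        auto it = instance.find(name);
        if (it != instance.end())
        {
            result += it->second;
        }
        else if ((it = defaults.find(name)) != defaults.end())
        {
            result += it->second;
        }
        else
        {
            // Unknown placeholder: keep it unchanged.
            result.append(text, open, close - open + 1);
        }
        pos = close + 1;
    }
    // Copy any remaining literal text.
    result.append(text, pos, std::string::npos);
    return result;
}
```

With the sfx_boom instance, audioResourceId="{packageId}:{audioId}" would expand to package_main:sfx_boom. Because no duration is passed, endTime would fall back to 1.0.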

In the file graph_sounds.xml we instantiate the individual sound files using the sub-graph defined in the file graph_sound_instance.xml:

<?xml version="1.0" ?>
<Graph>
    <Namespace id="sounds">
        <Instance graphResourceId="package_main:graph_sound_instance" audioId="sfx_boom"/>
        <Instance graphResourceId="package_main:graph_sound_instance" audioId="sfx_laser"/>
    </Namespace>
</Graph>

This file can in turn be instantiated in graph_main.xml, which adds the sounds to the graph:

<?xml version="1.0" ?>
<Graph>
    <View id="view"/>
    <Listener id="listener" viewId="view"/>
    <ListenerState listenerId="listener"/>
    <Instance graphResourceId="package_main:graph_sounds"/>
    <Camera id="camera" viewId="view" fieldOfViewX="400" clearColorBuffer="yes" />
    <CameraState cameraId="camera"/>
</Graph>

Each timeline can then be referenced simply via its namespace path:

AddGraphNode(mSFXBoom.GetReference(root, "sounds/sfx_boom/timeline"));
AddGraphNode(mSFXLaser.GetReference(root, "sounds/sfx_laser/timeline"));
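Conceptually, such a path walks down the nested namespaces one id at a time. The sketch below uses a minimal hypothetical node tree and a lookup that splits the path at '/'. It only illustrates the idea and is not the Murl engine's resolver.

```cpp
#include <map>
#include <memory>
#include <sstream>
#include <string>

// Hypothetical node with named children (namespaces or graph nodes).
struct Node
{
    std::map<std::string, std::unique_ptr<Node>> children;

    // Return the child with the given id, creating it if necessary.
    Node* AddChild(const std::string& id)
    {
        std::unique_ptr<Node>& slot = children[id];
        if (!slot)
        {
            slot.reset(new Node());
        }
        return slot.get();
    }
};

// Resolve a path such as "sounds/sfx_boom/timeline" segment by segment.
// Returns nullptr if any segment is missing.
const Node* Resolve(const Node& root, const std::string& path)
{
    const Node* current = &root;
    std::istringstream segments(path);
    std::string id;
    while (std::getline(segments, id, '/'))
    {
        auto it = current->children.find(id);
        if (it == current->children.end())
        {
            return nullptr;
        }
        current = it->second.get();
    }
    return current;
}
```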